Intelligent Intake & Decision Logic Architecture

A method for transforming ambiguous intake into scalable, defensible decisions

What this method is

Intelligent Intake & Decision Logic Architecture is a method for structuring how information enters a system so downstream decisions are coherent, scalable, and defensible.

It is used when organizations rely on fragmented intake sources, inconsistent evaluation rules, or manual judgment to make decisions that should be fast, predictable, and repeatable.

The method converts scattered inputs and subjective calls into a structured decision system that can scale without losing judgment quality.

The core problem this method addresses

Most teams assume their bottleneck is volume.

In practice, the constraint is almost always ambiguity.

Common symptoms include:

multiple intake sources collecting different information

inconsistent or incomplete data

unclear evaluation criteria

judgment calls made in Slack or meetings

downstream teams compensating for upstream uncertainty

When intake is poorly structured, decisions that should take seconds expand into reviews, escalations, and rework.

The system becomes neither fast nor trustworthy.

The core premise

Decisions do not scale through effort. They scale through structure.

If intake is inconsistent, no amount of automation, tooling, or staffing will produce reliable outcomes.

Intelligent Intake & Decision Logic Architecture treats intake not as a form problem but as a decision-system design problem.

Diagnose fragmentation at the intake layer

The first step is mapping how information actually enters the system.

This includes:

every intake source

every field variation

every manual override

every implicit decision point

every place humans intervene to compensate for missing structure

The goal is to identify where ambiguity is introduced — and how it propagates downstream.

Most systems fail here, long before decisions are made.
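Part of the mapping can be made mechanical: inventory the fields each source collects and flag the ones that only some sources capture. The sketch below assumes each source can be described as a set of field names. The source names, field names, and helper functions are hypothetical and stand in for whatever the real inventory turns up.

```python
from collections import defaultdict

# Hypothetical intake sources and the fields each one collects.
INTAKE_SOURCES = {
    "web_form": {"company", "email", "budget", "use_case"},
    "sales_email": {"company", "email", "notes"},
    "partner_referral": {"company", "contact_email", "budget"},
}


def field_coverage(sources: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each field name to the sorted list of sources that collect it."""
    coverage: dict[str, list[str]] = defaultdict(list)
    for source, fields in sources.items():
        for name in fields:
            coverage[name].append(source)
    return {name: sorted(owners) for name, owners in coverage.items()}


def fragmentation_report(sources: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return fields that only some sources collect: candidate ambiguity points."""
    coverage = field_coverage(sources)
    return {
        name: owners
        for name, owners in sorted(coverage.items())
        if len(owners) < len(sources)
    }
```

Here `fragmentation_report(INTAKE_SOURCES)` leaves out `company`, which every source collects, and flags `budget`, `contact_email`, `email`, `notes`, and `use_case`. The separate `email` and `contact_email` entries are the kind of naming variation this step is meant to expose.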

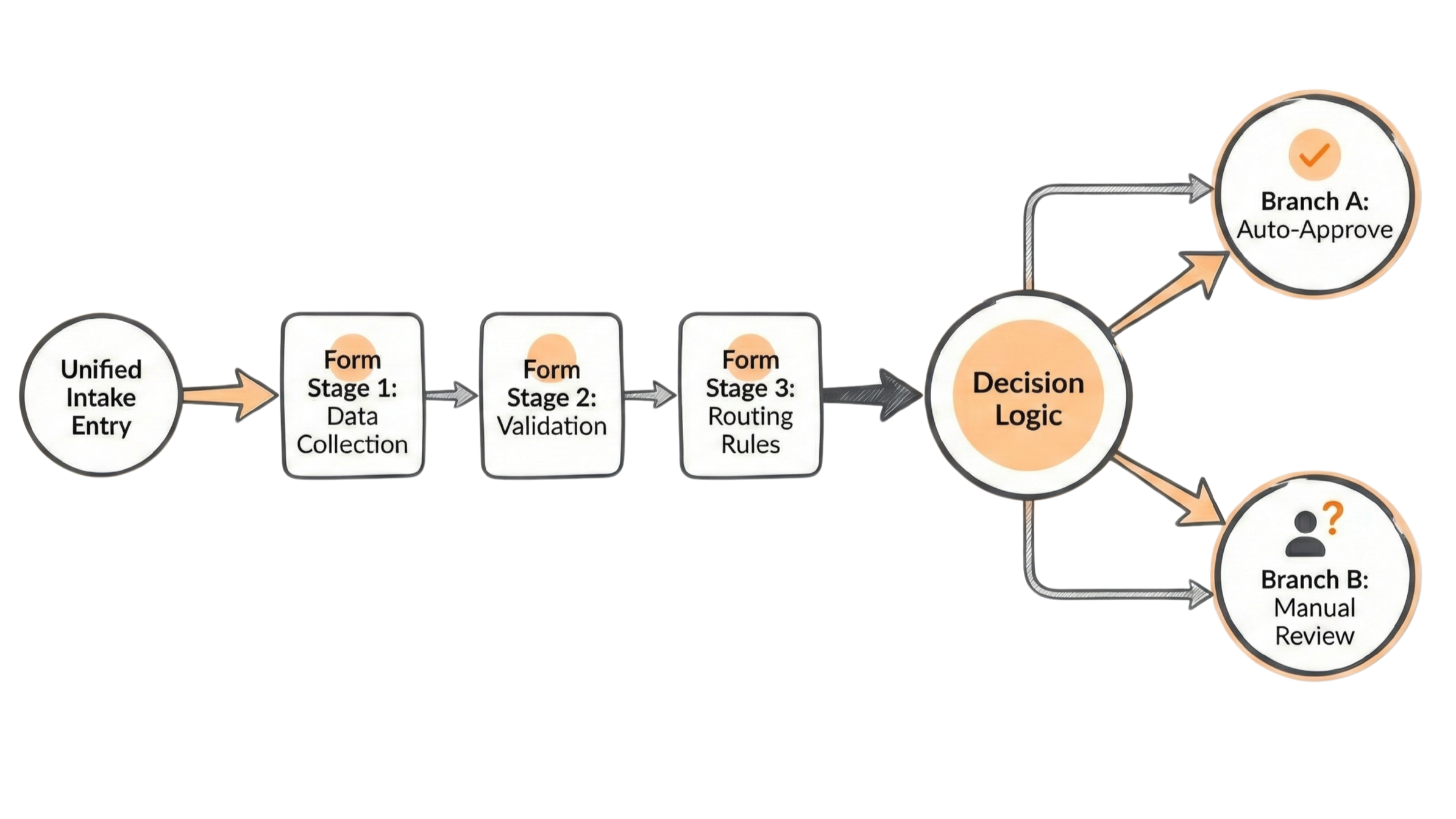

Design a structured intake architecture

Once fragmentation is visible, intake is redesigned as a multi-stage system, not a single front-loaded form.

A typical structure includes:

Stage 1: clean data capture

Stage 2: validation and error-proofing

Stage 3: decision-relevant inputs and routing signals

Each stage collects only what is required at that point in the decision process.

This sequencing reduces noise, improves data quality, and sharply lowers cognitive load.

Ambiguity drops because the system no longer asks for everything at once — only what matters, when it matters.
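The three stages can be sketched as a small pipeline. In this sketch they are modelled as sequential functions over a single submission, whereas a real system might present each stage as a separate form step. The `IntakeRecord` structure, the field names, and the validation rules are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class IntakeRecord:
    """One submission as it moves through the (hypothetical) intake stages."""
    raw: dict[str, str]
    clean: dict[str, str] = field(default_factory=dict)
    errors: list[str] = field(default_factory=list)
    signals: dict[str, object] = field(default_factory=dict)


def capture(record: IntakeRecord) -> IntakeRecord:
    """Stage 1: keep only the core identity fields, trimmed and normalized."""
    for key in ("company", "email"):
        value = record.raw.get(key, "").strip()
        if value:
            record.clean[key] = value.lower() if key == "email" else value
    return record


def validate(record: IntakeRecord) -> IntakeRecord:
    """Stage 2: catch gaps and malformed values before they travel downstream."""
    if "company" not in record.clean:
        record.errors.append("company is required")
    if "@" not in record.clean.get("email", ""):
        record.errors.append("email is missing or malformed")
    return record


def collect_signals(record: IntakeRecord) -> IntakeRecord:
    """Stage 3: gather decision-relevant inputs only for records that passed validation."""
    if record.errors:
        return record  # invalid records never reach routing
    budget = record.raw.get("budget", "").strip()
    record.signals["budget"] = int(budget) if budget.isdigit() else None
    record.signals["use_case"] = record.raw.get("use_case", "").strip() or None
    return record


STAGES = (capture, validate, collect_signals)


def run_intake(raw: dict[str, str]) -> IntakeRecord:
    """Pass a raw submission through each stage in order."""
    record = IntakeRecord(raw=raw)
    for stage in STAGES:
        record = stage(record)
    return record
```

Keeping the stages as separate functions means a validation rule can change without touching capture or routing, and a record that fails Stage 2 never produces routing signals.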

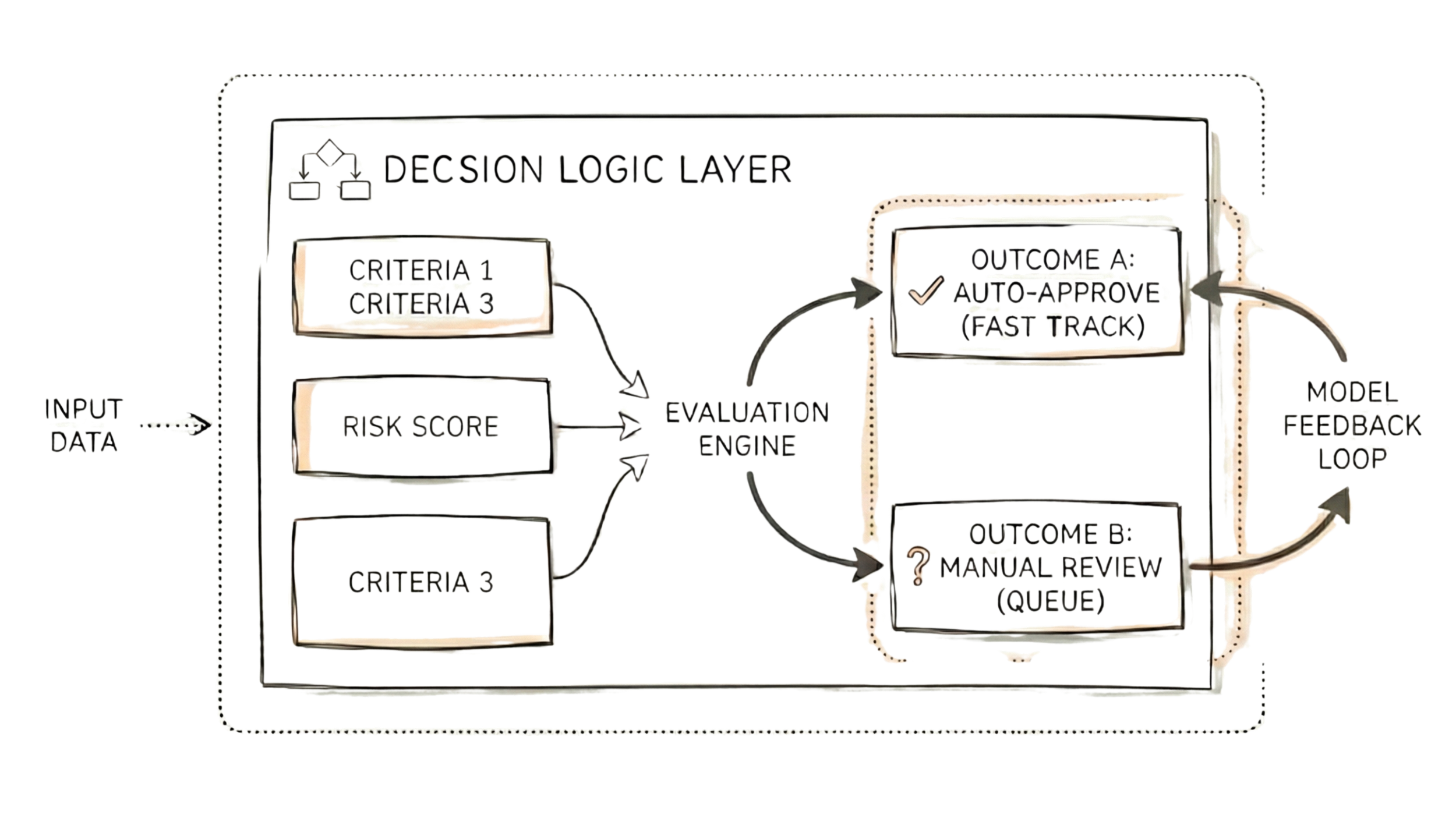

Build an explicit decision logic layer

With structured intake in place, the method introduces a decision logic layer that makes evaluation rules explicit.

This typically includes:

clear criteria definitions

thresholds and disqualifiers

risk indicators

completeness checks

weighted evaluation rules
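Weighted evaluation rules become explicit once each criterion is written down as data: a name, a weight, and a check. The following is a minimal sketch under that assumption. The criteria, weights, and cutoffs are hypothetical, and a weighted sum is only one of several ways to combine them.

```python
from dataclasses import dataclass
from typing import Callable

Record = dict[str, object]


@dataclass(frozen=True)
class Criterion:
    """A named, weighted rule; every criterion is visible and reviewable."""
    name: str
    weight: float
    check: Callable[[Record], bool]


# Illustrative criteria: names, weights, and cutoffs are assumptions.
CRITERIA = (
    Criterion("budget_at_least_10k", 0.5, lambda r: (r.get("budget") or 0) >= 10_000),
    Criterion("use_case_provided", 0.3, lambda r: bool(r.get("use_case"))),
    Criterion("existing_customer", 0.2, lambda r: r.get("existing_customer") is True),
)


def weighted_score(
    record: Record, criteria: tuple[Criterion, ...] = CRITERIA
) -> tuple[float, list[str]]:
    """Return the weighted score and the names of criteria the record failed."""
    score = 0.0
    failed: list[str] = []
    for criterion in criteria:
        if criterion.check(record):
            score += criterion.weight
        else:
            failed.append(criterion.name)
    return round(score, 2), failed
```

For a record with a 25,000 budget and a stated use case, `weighted_score` returns a score of 0.8 and reports `existing_customer` as the one failed criterion. The failed list is what later lets a routing decision explain itself.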

Instead of relying on tacit judgment, the system produces legible outcomes based on known logic.

Common outputs include:

auto-approval or fast-track paths

structured queues for manual review

explicit reasons for routing or escalation

Judgment is not removed — it is contained and governed.
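A routing step that produces these outputs might look like the sketch below. It assumes a score has already been computed and checks completeness, disqualifiers, and risk indicators in a fixed order. Every outcome carries its reasons. The field names, the blocked domain, the risk flag, and the 0.8 fast-track threshold are all hypothetical.

```python
from dataclasses import dataclass, field

Record = dict[str, object]

# Hypothetical policy values; a real deployment would set these per use case.
REQUIRED_FIELDS = ("company", "email")
BLOCKED_DOMAINS = {"example.invalid"}          # disqualifier
HIGH_RISK_FLAGS = ("legal_review_requested",)  # risk indicators
FAST_TRACK_SCORE = 0.8                         # threshold for auto-approval


@dataclass
class Decision:
    route: str  # "auto_approve", "manual_review", or "decline"
    reasons: list[str] = field(default_factory=list)


def route_record(record: Record, score: float) -> Decision:
    """Route a scored record and record the reason behind the outcome."""
    missing = [name for name in REQUIRED_FIELDS if not record.get(name)]
    if missing:
        return Decision("manual_review", [f"incomplete: missing {', '.join(missing)}"])

    domain = str(record["email"]).rsplit("@", 1)[-1].lower()
    if domain in BLOCKED_DOMAINS:
        return Decision("decline", [f"disqualified: blocked domain {domain}"])

    risks = [flag for flag in HIGH_RISK_FLAGS if record.get(flag)]
    if risks:
        return Decision("manual_review", [f"risk indicator: {flag}" for flag in risks])

    if score >= FAST_TRACK_SCORE:
        return Decision("auto_approve", [f"score {score} >= {FAST_TRACK_SCORE}"])
    return Decision("manual_review", [f"score {score} < {FAST_TRACK_SCORE}"])
```

The order of the checks is itself a policy choice. Here an incomplete record goes to review before any disqualifier is evaluated, so a reviewer never sees a decline that was based on partial data.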

Create learning without instability

A critical component of the method is designing feedback loops that allow decisions to improve over time without breaking the operational model.

This ensures:

evaluation criteria can evolve

thresholds can be adjusted

edge cases can be incorporated

…without reintroducing chaos or manual workarounds.

The system learns while remaining stable.
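One way to let thresholds evolve without destabilizing live routing is to treat each rule set as an immutable version, then replay past records under a proposed version before adopting it. This is a sketch of that idea, not a prescribed mechanism. `RuleSet`, its fields, and the simplified two-outcome routing are assumptions.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RuleSet:
    """An immutable, versioned set of thresholds (hypothetical fields)."""
    version: int
    fast_track_score: float
    min_budget: int


def revise(rules: RuleSet, **changes) -> RuleSet:
    """Create the next version instead of mutating the one in production."""
    return replace(rules, version=rules.version + 1, **changes)


def changed_outcomes(history: list[dict], current: RuleSet, proposed: RuleSet) -> list[str]:
    """Replay past records under both rule sets; return IDs whose route would change."""

    def outcome(record: dict, rules: RuleSet) -> str:
        fast = record["score"] >= rules.fast_track_score and record["budget"] >= rules.min_budget
        return "auto_approve" if fast else "manual_review"

    return [r["id"] for r in history if outcome(r, current) != outcome(r, proposed)]
```

For example, lowering `fast_track_score` from 0.8 to 0.7 through `revise` would move a past record scored 0.75 with a 20,000 budget from manual review to auto-approval. Records below `min_budget` stay in review under both versions. Logging the version number alongside each decision keeps earlier outcomes explainable after the rules change.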

What changes when intake and logic are explicit

When intake and decision logic are properly structured, systems behave differently:

decisions happen faster with fewer escalations

downstream teams receive clean, reliable inputs

manual review volume drops

routing becomes predictable

leadership gains confidence in how decisions are made

Most importantly, scale no longer increases risk.

The Work

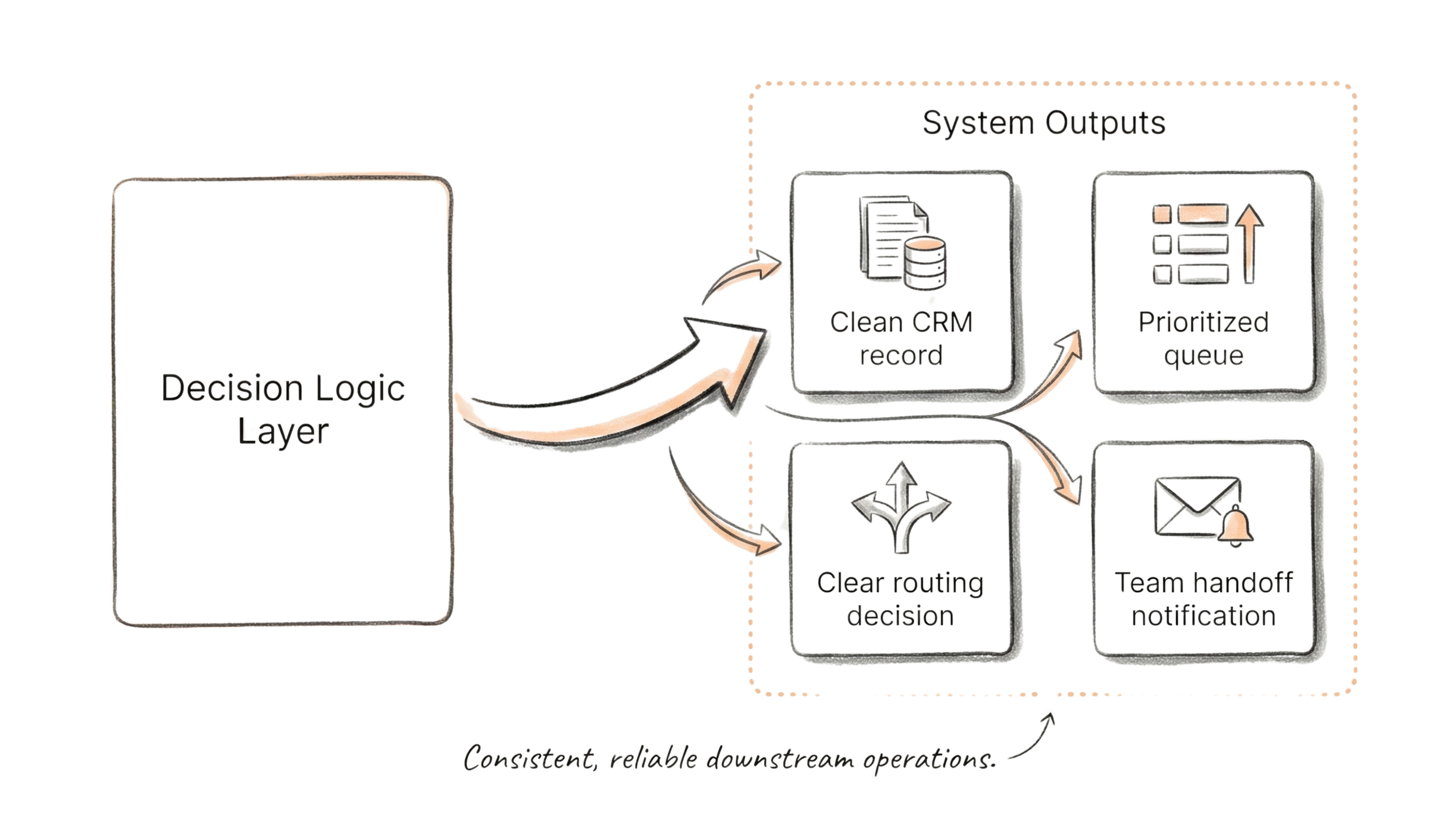

Relationship to downstream operations

A clean decision layer allows downstream systems to function as intended.

Reliable intake and logic enable:

accurate CRM records

prioritized queues

consistent handoffs

automated notifications and workflows

Downstream teams no longer compensate for upstream ambiguity — the system carries the load.
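Because each routed record carries its reasons, a downstream queue can order the work and give the reviewer the context in a single step. This minimal sketch uses Python's standard `heapq` module. `ReviewQueue` and its priority scheme (lower number first, arrival order within a priority) are hypothetical.

```python
import heapq
import itertools


class ReviewQueue:
    """Manual-review queue ordered by priority, then arrival (hypothetical)."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str, list[str]]] = []
        # Monotonic counter breaks ties so equal priorities stay first-in, first-out.
        self._counter = itertools.count()

    def push(self, record_id: str, priority: int, reasons: list[str]) -> None:
        # Lower number is handled sooner; the routing reasons travel with the item.
        heapq.heappush(self._heap, (priority, next(self._counter), record_id, reasons))

    def pop(self) -> tuple[str, list[str]]:
        """Remove and return the next record ID and its routing reasons."""
        _, _, record_id, reasons = heapq.heappop(self._heap)
        return record_id, reasons

    def __len__(self) -> int:
        return len(self._heap)
```

The counter also keeps `heapq` from ever comparing the reason lists, because no two entries share the same first two tuple elements.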

Where this method is most applicable

Intelligent Intake & Decision Logic Architecture is particularly effective when:

decisions rely heavily on judgment

intake volume is increasing

multiple teams depend on shared evaluation rules

automation initiatives keep stalling

downstream errors trace back to upstream inconsistency

It is often foundational to automation, AI, or productization — but delivers value even without those layers.

What this method is not

It is not a form redesign.

It is not a rules engine bolted onto chaos.

It is not automation-first.

It is not a tool-selection exercise.

It is a method for making decision logic explicit, governable, and scalable.

How this method is used

Intelligent Intake & Decision Logic Architecture is a foundational method used within broader decision audits, attribution design, and system-framing work where intake quality determines execution quality.